Microsoft Azure Event Hubs

Azure Event Hubs is a big data streaming platform and event ingestion service from Microsoft. Data sent to an event hub can be transformed and stored by using any real-time analytics provider or batching/storage adapters.

NXLog Agent can be configured to send data to Azure Event Hubs via the Kafka and HTTP protocols using the om_kafka and om_http modules. NXLog Agent can also receive log data from Azure Event Hubs via the Kafka protocol using the im_kafka module.

The Kafka endpoint requires at least the Standard tier, while HTTP works with any tier. For more information on tiers, see the What is the difference between Event Hubs Basic and Standard tiers? section in the Microsoft documentation. Both methods use a shared access signature (SAS) for authentication.

Configuring Event Hubs

To forward logs to and retrieve logs from Azure Event Hubs, you need an Azure account with an appropriate subscription.

Once signed in to Azure, create a resource group, an Event Hubs namespace, and an event hub. Their names are required when configuring NXLog Agent.

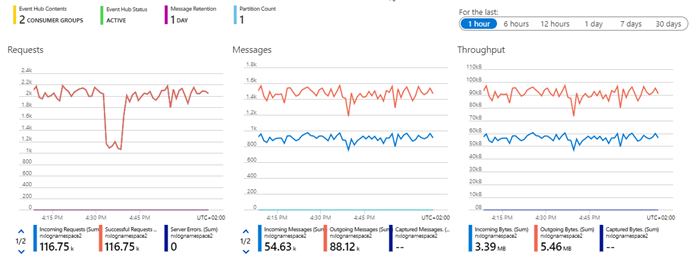

Once these are created, the event hub can be found by navigating to the Home > Event Hubs > <YOURNAMESPACE> > Event Hubs page in the Azure portal. This page lists basic details about the event hub as well as graphs of the data flow, and the left-hand panel serves as a control panel for managing the event hub.

Configure NXLog Agent to send data with Kafka (om_kafka)

NXLog Agent can forward logs to an event hub via the Kafka protocol.

To configure NXLog Agent, you need the following details:

- The value for the BrokerList directive. It is derived from the namespace name, a fixed domain, and a port number, and looks like <YOURNAMESPACE>.servicebus.windows.net:9093. Replace the namespace with the one in your environment.
- The name of the event hub created in Azure, for the Topic directive.
- The name of your consumer group, set with the group.id option of the Option directive. Read more about consumer groups in the Microsoft documentation.
- Your primary or secondary key. Follow the instructions in Get an Event Hubs connection string to retrieve the connection string, which is set as the SASL password in an Option directive.
- A CA certificate, even though it is not listed as a requirement by Azure Event Hubs.
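The BrokerList value and the SASL settings listed above can all be taken from the connection string. The following Python sketch shows that derivation. It assumes the usual connection string layout (Endpoint=sb://...;SharedAccessKeyName=...;SharedAccessKey=...), and parse_connection_string and kafka_settings are hypothetical helpers for illustration, not part of NXLog Agent or any Azure SDK.

```python
from urllib.parse import urlparse


def parse_connection_string(conn_str: str) -> dict:
    """Split an Event Hubs connection string into its key=value segments."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first "=" only: base64 keys may end in "=" padding.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        parts[key] = value
    return parts


def kafka_settings(conn_str: str) -> dict:
    """Derive om_kafka/im_kafka values from a connection string (hypothetical helper)."""
    parts = parse_connection_string(conn_str)
    # Endpoint looks like sb://<namespace>.servicebus.windows.net/
    host = urlparse(parts["Endpoint"]).hostname
    return {
        "BrokerList": f"{host}:9093",          # Kafka endpoint port used in this guide
        "sasl.username": "$ConnectionString",  # literal text, not a variable
        "sasl.password": conn_str.strip(),     # the full connection string
    }


if __name__ == "__main__":
    # Placeholder connection string; substitute your own.
    example = (
        "Endpoint=sb://mynamespace.servicebus.windows.net/;"
        "SharedAccessKeyName=RootManageSharedAccessKey;"
        "SharedAccessKey=PLACEHOLDERKEY="
    )
    print(kafka_settings(example)["BrokerList"])
    # prints: mynamespace.servicebus.windows.net:9093
```

Splitting each segment on the first "=" only matters because the key itself is base64 and often ends in "=" padding.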

In this configuration, logs are forwarded to Azure Event Hubs by the om_kafka module.

<Output out>
    Module        om_kafka
    BrokerList    <YOURNAMESPACE>.servicebus.windows.net:9093
    Topic         <YOUREVENTHUB>
    Option        security.protocol SASL_SSL
    Option        group.id <YOURCONSUMERGROUP>
    Option        sasl.mechanisms PLAIN
    Option        sasl.username $ConnectionString
    Option        sasl.password <YOUR Connection string–primary key>
    CAFile        C:\Program Files\nxlog\cert\<ca.pem>
</Output>

Configure NXLog Agent to send data with HTTP (om_http)

NXLog Agent can forward its collected logs to Azure Event Hubs via the HTTP protocol.

To configure NXLog Agent, you need the following details:

- A shared access signature (SAS) token. The Microsoft documentation lists various scripts and methods to generate a SAS token. For the example below, the PowerShell example was used; none of the other methods or scripts were tested.
- The name of the namespace you created, which is required in the URL directive and in the Host HTTP header set by the AddHeader directive. The URL also contains the name of the event hub (nxlogeventhub in the example below).

| The PowerShell example can be executed in the Azure Cloud Shell using the Try it button. |
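The PowerShell example builds the token by signing the URL-encoded resource URI and an expiry timestamp with HMAC-SHA256. The Python sketch below follows the same steps for illustration; unlike the PowerShell example, it has not been tested against Azure. generate_sas_token is a hypothetical helper, and the namespace, event hub, policy name, and key are placeholders.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(resource_uri: str, key_name: str, key: str,
                       ttl_seconds: int = 3600,
                       now: Optional[float] = None) -> str:
    """Build a SAS token string for the Authorization header (sketch)."""
    issued = time.time() if now is None else now
    expiry = str(int(issued + ttl_seconds))  # Unix time when the token expires
    # The string to sign is the URL-encoded resource URI, a newline, and the expiry.
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    # The key text itself is the HMAC key; it is not base64-decoded first.
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}&sig={signature}"
            f"&se={expiry}&skn={key_name}")


if __name__ == "__main__":
    # Placeholder values: replace with your namespace, event hub, and policy key.
    print(generate_sas_token(
        "mynamespace.servicebus.windows.net/nxlogeventhub",
        "RootManageSharedAccessKey",
        "PLACEHOLDERKEY",
        ttl_seconds=7 * 24 * 3600,
    ))
```

The whole printed string, including the SharedAccessSignature prefix, is the value for the Authorization header. Because the token stops being valid at the se timestamp, a static value in AddHeader has to be regenerated before it expires.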

The om_http module also supports sending logs in batches with the BatchMode directive. The accepted values for this directive are multiline and multipart; however, Azure Event Hubs can only process logs sent with the multiline batching method.
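As we understand the multiline mode, several log records are sent in a single HTTP request body, separated by newline characters. The Python sketch below illustrates that packing under one assumption: max_bytes is a hypothetical size cap chosen for illustration and does not correspond to a specific om_http directive. It is not om_http's actual implementation.

```python
from typing import Iterable, List


def build_multiline_batches(records: Iterable[str], max_bytes: int) -> List[bytes]:
    """Pack records into newline-separated bodies no larger than max_bytes."""
    batches: List[bytes] = []
    current: List[bytes] = []
    size = 0
    for record in records:
        line = record.encode("utf-8")
        if len(line) > max_bytes:
            raise ValueError("a single record is larger than max_bytes")
        # A record after the first one also needs a newline separator.
        extra = len(line) + (1 if current else 0)
        if current and size + extra > max_bytes:
            # Flush the full batch and start a new one with this record.
            batches.append(b"\n".join(current))
            current, size = [], 0
            extra = len(line)
        current.append(line)
        size += extra
    if current:
        batches.append(b"\n".join(current))
    return batches


if __name__ == "__main__":
    for body in build_multiline_batches(["event 1", "event 2", "event 3"], 16):
        print(body)
```

Each returned body would be one request, so batching reduces the number of HTTP round trips per record.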

In this configuration, logs are sent to Azure Event Hubs using the om_http module with multiline batching enabled.

<Output out>
    Module        om_http
    BatchMode     multiline
    URL           https://<YOURNAMESPACE>.servicebus.windows.net/nxlogeventhub/messages
    HTTPSCAFile   C:\cacert.pem
    AddHeader     Authorization: <YOURSASTOKEN>
    AddHeader     Content-Type: application/atom+xml;type=entry;charset=utf-8
    AddHeader     Host: <YOURNAMESPACE>.servicebus.windows.net
</Output>

Confirm data reception

There are several ways to confirm data reception in Azure Event Hubs.

The easiest way to do this is to browse to the Home > Event Hubs > <YOURNAMESPACE> > Event Hubs page in the Azure portal, where Microsoft provides a chart that displays incoming and outgoing message counts as well as event throughput metrics.

Logs forwarded to Azure Event Hubs by NXLog Agent can also be collected using the im_kafka module. The logs collected with this method are identical to the ones sent to Azure Event Hubs.

This configuration uses the same settings as the om_kafka configuration in the first example. The only difference is the direction of the log flow. This configuration collects the logs and writes them to a file.

<Input in>
    Module        im_kafka
    BrokerList    nxlognamespace.servicebus.windows.net:9093
    Topic         nxlogeventhub
    Option        security.protocol SASL_SSL
    Option        group.id nxlogconsumergroup
    Option        sasl.mechanisms PLAIN
    Option        sasl.username $ConnectionString
    Option        sasl.password <Connection string–primary key>
    CAFile        C:\Program Files\nxlog\cert\ca.pem
</Input>

<Output file>
    Module        om_file
    File          "C:\\logs\\logmsg.txt"
</Output>

In addition to the above, Azure Event Hubs can feed data into Azure’s own storage technologies, such as Azure Blob Storage and Azure Data Lake Storage, where data reception can also be confirmed. However, these methods are outside the scope of this document.

Data throughput considerations

| This section is for informational purposes only. |

When deciding which method to use for sending logs to Azure Event Hubs, performance, throughput, and size can all be decisive factors. Note that measured throughput depends on several factors, including but not limited to:

- The performance and resource availability of the node that NXLog Agent runs on.
- The performance and capability of any networking equipment between Azure Event Hubs and the machine that NXLog Agent runs on.
- The quality of service provided by your ISP, including any bandwidth restrictions.
- Your geographic location and how you set up Azure Event Hubs.
- The number of throughput units you have purchased with your Azure Event Hubs subscription.
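To estimate how many throughput units a workload needs, the per-unit ingress limits can be compared with the expected event rate and size. Microsoft documents ingress of up to 1 MB per second or 1,000 events per second per throughput unit, whichever is reached first. The Python sketch below applies those two limits; reading "1 MB" as 1,048,576 bytes is an assumption, and the event rate and average size are inputs you would measure yourself.

```python
import math

# Ingress limits per throughput unit (TU), as documented by Microsoft.
# Reading "1 MB" as 1,048,576 bytes is an assumption of this sketch.
INGRESS_BYTES_PER_TU = 1024 * 1024
INGRESS_EVENTS_PER_TU = 1000


def required_throughput_units(events_per_second: float,
                              avg_event_bytes: float) -> int:
    """Smallest TU count that keeps both ingress limits satisfied (estimate)."""
    by_events = events_per_second / INGRESS_EVENTS_PER_TU
    by_bytes = events_per_second * avg_event_bytes / INGRESS_BYTES_PER_TU
    # Whichever limit is reached first decides; at least one TU is always needed.
    return max(1, math.ceil(max(by_events, by_bytes)))


if __name__ == "__main__":
    # 2,000 events/s of about 2 KiB each: the byte limit dominates.
    print(required_throughput_units(2000, 2048))  # prints 4
```

This only covers ingress limits; it says nothing about the network, host, and ISP factors listed above, which is why testing in your own environment still matters.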

It is also worth considering which tier to use: according to the Event Hubs pricing, Kafka is not available in the Basic tier, so it requires a more expensive subscription.

In our tests, we used a single throughput unit and generated data with the Test Generator (im_testgen) module. We concluded that both Kafka and HTTP work reliably, but HTTP offers better throughput, especially with batching.

In any case, we strongly recommend thorough testing in your environment.